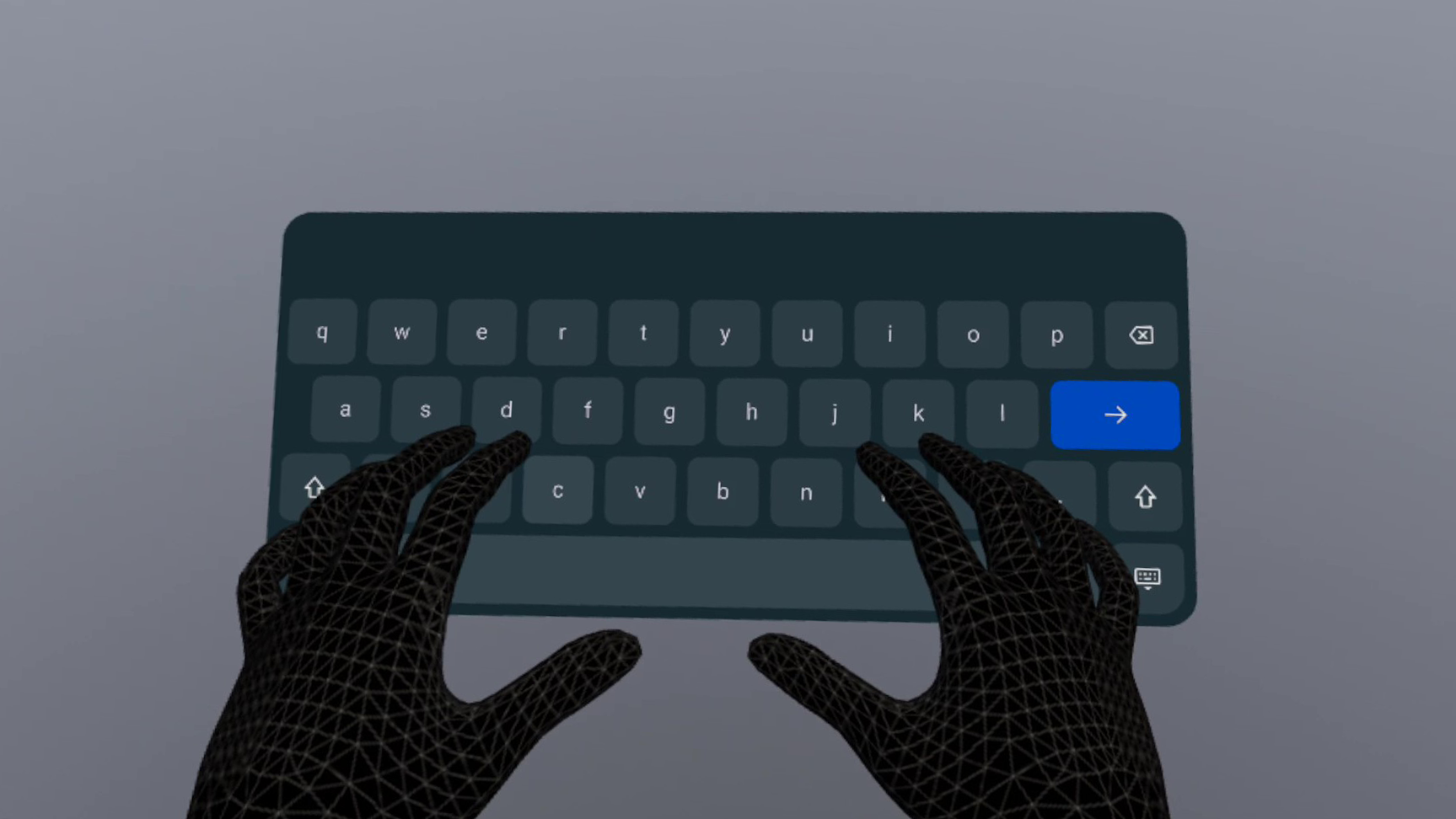

Quest’s new virtual keyboard neatly integrates into apps instead of just being a crude overlay.

If you develop an app for smartphones, you don’t have to also build a touchscreen keyboard. The operating system handles that for you. In VR and AR this isn’t quite so simple, since a virtual keyboard is an object in three-dimensional space.

Building a virtual keyboard from scratch, especially one that handles accent marks for different languages, is a significant investment of time and effort. It also means users get an inconsistent text entry experience from one app to the next.

On Quest, developers have been able to bring up the virtual keyboard used by Meta’s system software since mid-2020. But this appears as a crude overlay in a fixed position, rendering above the app no matter where other virtual objects are and replacing the user’s in-app hands with the translucent system “ghost hands” until they’ve finished typing. It feels completely out of place.

Meta’s new Virtual Keyboard for Unity solves these problems. Instead of just being an API call to an overlay, it’s an actual prefab that developers position in their apps. Virtual Keyboard works with either hands or controllers, and developers can choose between close-up Direct Touch mode or laser pointers at a distance.

The operating system handles populating the surface of Virtual Keyboard with keys for the user’s locale, meaning the keyboard will get future features and improvements over time even if the developer never updates the app.

Virtual Keyboard was introduced as an experimental feature in April, so apps using it couldn’t be shipped to the store or App Lab, but in the v54 SDK released this week it has graduated to being a production feature.