Researchers can take an image and use it as a reference point to create a virtual world, object, or person.

As companies explore having a metaverse presence through a digital twin, the ability to quickly and easily build out stylized 3D content and virtual worlds will only become more important.

A recently published Cornell University paper explored this growing trend and developed a solution for producing stylized neural radiance fields (SNeRFs) that can be used to create a wide range of dynamic virtual scenes at greater speeds than traditional methods.

Using various reference images, the research team of Thu Nguyen-Phuoc, Feng Liu, and Lei Xiao was able to generate stylized 3D scenes that could be used in a variety of virtual environments. For example, imagine putting on a VR headset and viewing how the real world would look through a stylized lens such as a Pablo Picasso painting.

This process allows the team not only to create virtual objects quickly but also to use their real-world environment as part of the virtual world with 3D object detection.

It is important to note that the stylized scenes also keep the same object looking consistent when it is observed from different view directions, a property known as cross-view consistency. This creates an immersive 3D effect when viewed in VR.

By alternating the NeRF and stylization optimization steps, the research team was able to use an image as a style reference and then recreate a real-world environment, object, or person in that image's style, thereby speeding up the creation process.

“We introduce a new training method to address this problem by alternating the NeRF and stylization optimization steps,” said the team. “Such a method enables us to make full use of our hardware memory capacity to both generate images at higher resolution and adopt more expressive image style transfer methods. Our experiments show that our method produces stylized NeRFs for a wide range of content, including indoor, outdoor and dynamic scenes, and synthesizes high-quality novel views with cross-view consistency.”

Because of NeRF's memory limitations, the researchers also had to work out how to render higher-resolution 3D imagery at speeds that felt closer to real time. Their solution was a loop of rendered views in which each iteration targeted stylization points more consistently and then rebuilt the image with more detail.
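To make the alternating loop a little more concrete, here is a rough toy sketch in Python. It is not the team's implementation: the "scene" is just a vector, `render` is a linear projection standing in for NeRF rendering, and `stylize` matches mean and standard deviation instead of running a neural style transfer model. All function names and parameters here are hypothetical. What it tries to show is only the shape of the idea: stylize the rendered views in one step, then refit the shared scene to those stylized views in a separate step, and repeat.

```python
import numpy as np


def render(scene, view):
    """Toy stand-in for NeRF rendering (assumption): a linear projection of the scene."""
    return view @ scene


def stylize(image, style_mean, style_std):
    """Toy stand-in for image style transfer (assumption): match the style's mean/std."""
    std = image.std()
    if std < 1e-8:
        # A constant image carries no contrast to rescale.
        return np.full_like(image, style_mean)
    return (image - image.mean()) / std * style_std + style_mean


def fit_scene(scene, views, targets, steps=50, lr=0.1):
    """'NeRF step': gradient descent so that rendered views match the stylized targets."""
    for _ in range(steps):
        grad = np.zeros_like(scene)
        for view, target in zip(views, targets):
            grad += view.T @ (render(scene, view) - target)
        scene = scene - lr * grad / len(views)
    return scene


def alternating_stylization(scene, views, style, rounds=5):
    """Alternate between stylizing rendered views and refitting the shared scene."""
    for _ in range(rounds):
        # Stylization step: each rendered view is stylized on its own.
        targets = [stylize(render(scene, v), style.mean(), style.std()) for v in views]
        # Scene step: one shared scene is fitted to all stylized views at once,
        # which is what pulls the views toward agreeing with each other.
        scene = fit_scene(scene, views, targets)
    return scene


# Hypothetical toy data: a 16-value scene seen through four 8-pixel views.
rng = np.random.default_rng(0)
scene = rng.normal(size=16)
views = [rng.normal(size=(8, 16)) / 4 for _ in range(4)]
style = rng.uniform(0.5, 1.0, size=64)
stylized_scene = alternating_stylization(scene, views, style)
```

Because the two steps run one after the other rather than together, only one of them needs to be active at a time, which is the intuition behind the memory savings the team describes.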

The technology also improved avatars. The research team’s SNeRF approach let them create avatars that were more expressive during conversations. The result is dynamic 4D avatars that can realistically convey emotions such as anger, fear, excitement, and confusion, all without having to use an emoji or press a button on a VR controller.

The research is ongoing, but so far the team has developed a method for 3D scene stylization using implicit neural representations that applies to both environments and avatars. Additionally, their alternating stylization approach let them make full use of their hardware memory capacity to stylize both static and dynamic 3D scenes, generating images at higher resolution and adopting more expressive image style transfer methods in VR.

If you’re interested in digging deep into the details, you can access their report here.

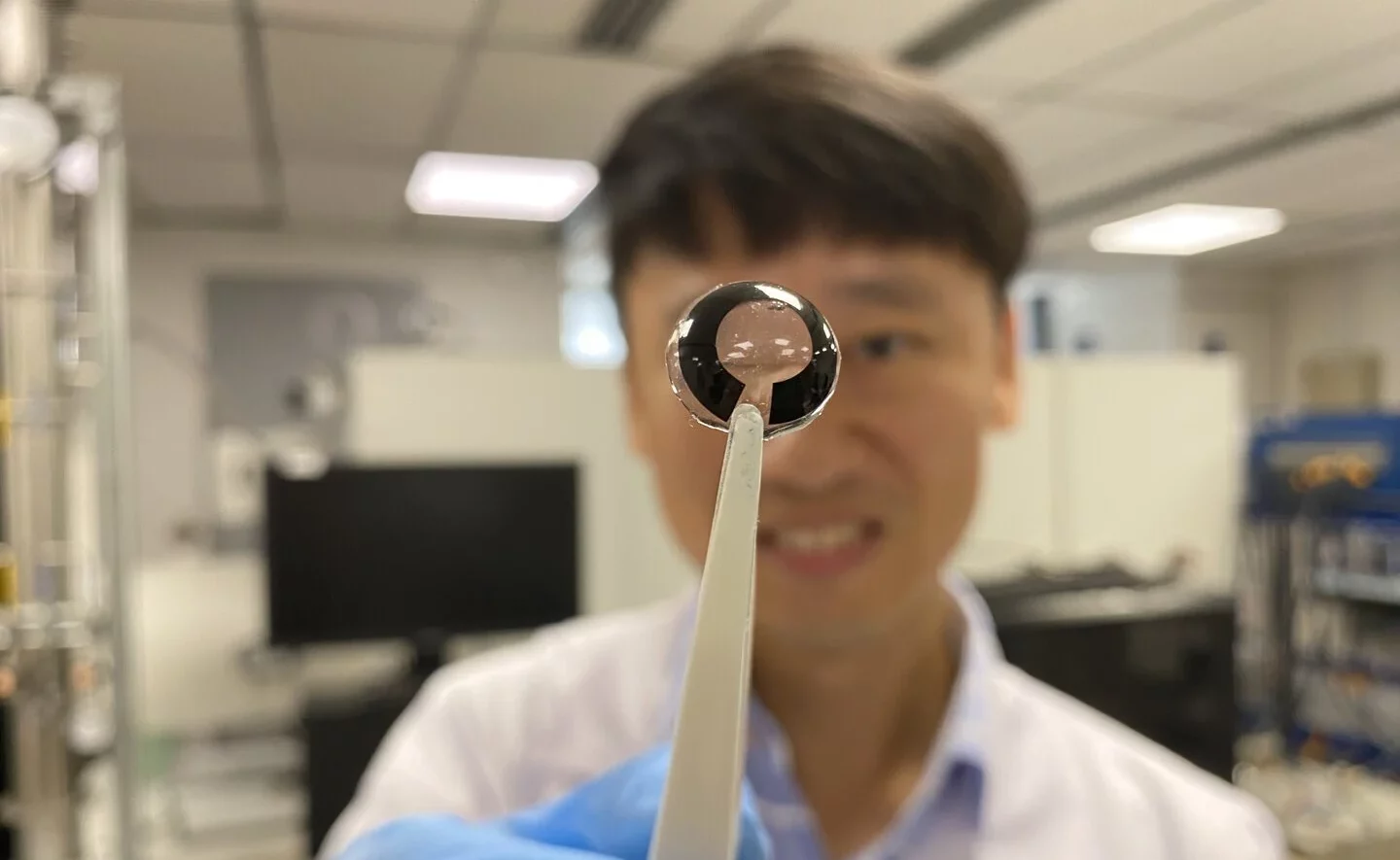

Image Credit: Cornell University

The post This Technology Can Turn The Real-World Into Living Art appeared first on VRScout.