Meta AI will be available on Quest headsets in the US and Canada as an experimental feature in August, and will include the Vision capability on Quest 3.

Meta AI is the company’s conversational AI assistant, powered by its Llama series of open-source large language models (LLMs). In the US and Canada, Meta AI is currently available in text form on the web at meta.ai and in the WhatsApp and Messenger phone apps, and in audio form on Ray-Ban Meta smart glasses by saying “Hey Meta”.

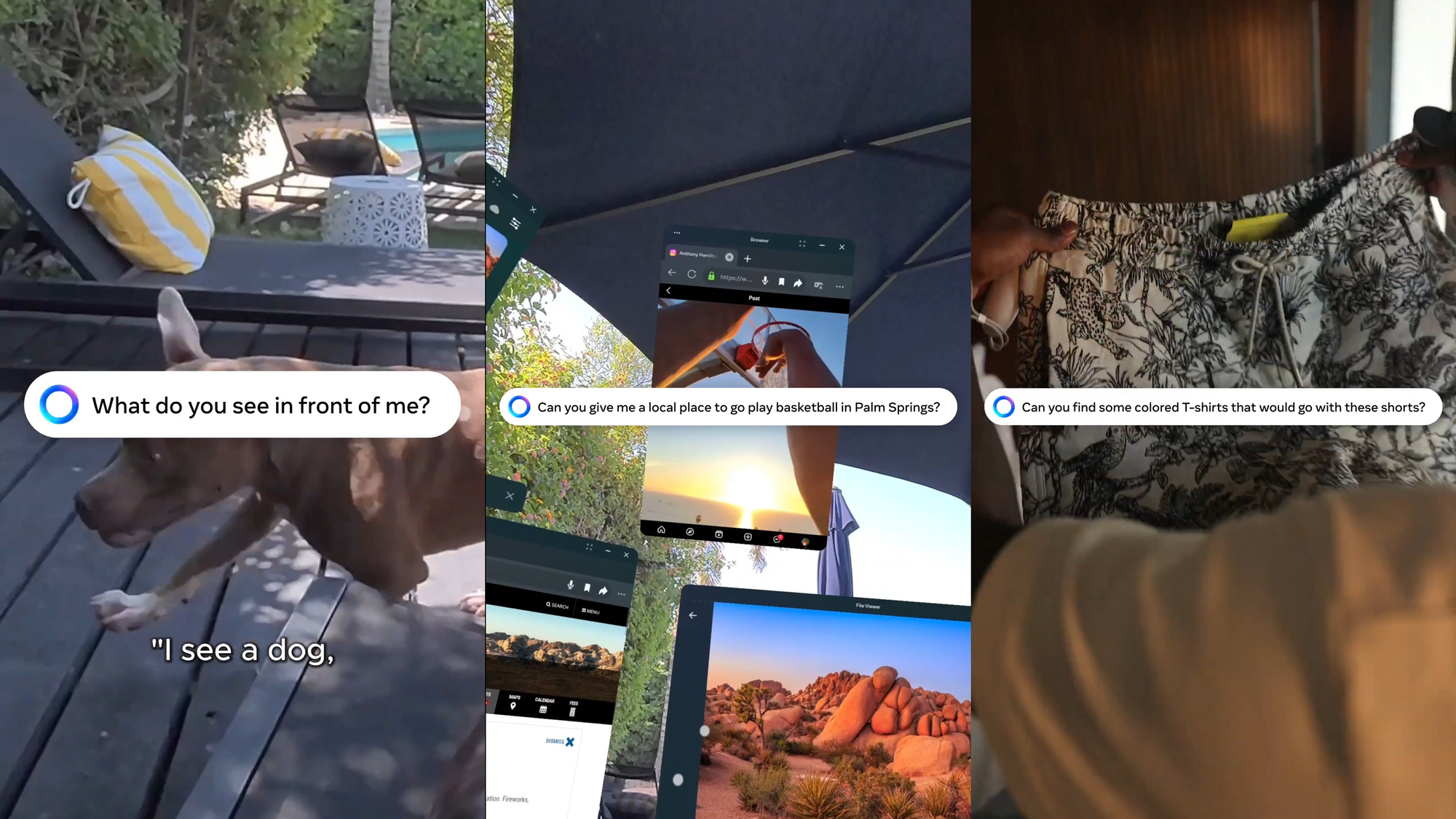

Since April, Meta AI has been multimodal on the Ray-Ban glasses, meaning it can answer questions about what you’re looking at by capturing and analyzing an image from the cameras.

This capability is branded as Meta AI with Vision, and it will also be available on Quest 3 via its passthrough cameras “to start”, suggesting it may eventually be able to see virtual objects and immersive VR too. Notably, third-party developers can’t access the passthrough cameras, so other companies can’t currently launch a competing visual AI on Quest.

Meta AI will also be available on Quest 2, but it won’t include the Vision feature, meaning it will be limited to voice input.

On both headsets, Meta AI will be able to access real-time information via Bing, such as sports scores and weather.

Footage of Meta AI on Quest 3 from influencer Anthony Hamilton Jr.

Meta says Meta AI will replace the existing Voice Command system on Quest, which will be deprecated in the near future.

Voice Commands were also only available in the US and Canada, and there’s no timeline for Meta AI to expand to other countries. Meta recently confirmed it won’t release multimodal Llama models in the European Union due to “the unpredictable nature of the European regulatory environment”, though it said it will release them in the UK due to greater regulatory certainty. For now, Meta simply says it will “continue to improve the experience over time”.