The new Apple Pencil Pro would make a perfect accessory for Apple Vision Pro.

Alongside new iPad Pro and iPad Air models, today Apple announced the $130 Apple Pencil Pro for use with them.

The new Apple Pencil Pro.

In addition to the pressure sensing and double-tap detection of the existing second-generation Apple Pencil, the new Pro model has a gyroscope, a squeeze sensor, and haptic feedback. Apple didn’t say anything about it supporting any device other than the new iPads it just announced, but these features would make it an ideal controller for Apple Vision Pro.

It Makes Perfect Sense

Vision Pro and visionOS are designed around the combination of precise eye tracking and controller-free hand tracking, fused into a “gaze and pinch” input system that at times feels almost telepathic. When announcing the headset, Apple boasted that “it’s so precise, it completely frees up your hands from needing clumsy hardware controllers”.

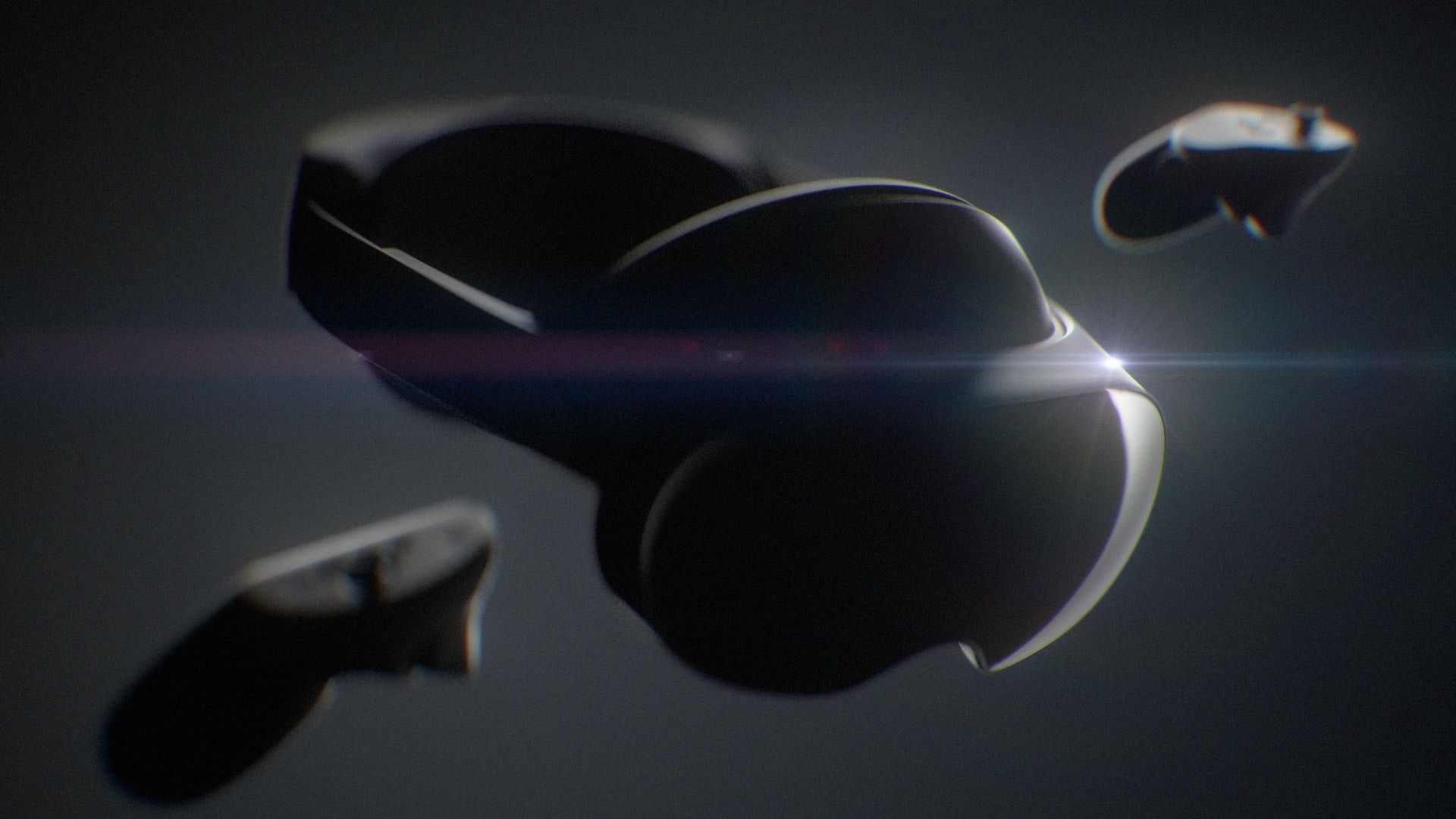

Statements like these make it clear that Apple won’t ship a gamepad split in half, the dominant form of input on every other major AR/VR platform, any time soon. But the lack of tactility in visionOS’s default input paradigm severely limits the headset’s usefulness for the creative and professional use cases that iPad Pro is marketed for, and that Sony hopes to target with its upcoming standalone headset.

Given that Apple is already focusing heavily on enterprise with Vision Pro, a prudent strategy considering the headset’s price point, will it really be content to cede the segment of the market that requires a precision input device?

Supporting Apple Pencil Pro in visionOS could be Apple’s strategy to make the platform suitable for both 2D and 3D precision creativity. Theoretically, Apple could let you turn any desk into a massive drawing or design surface, or turn the space around you into a spatial canvas for modeling, animation, 3D painting, and more.

It Could Work On A Technical Level

Vision Pro is already capable of skeletal hand and object tracking, albeit at a low update rate. Tracking a bright white object of known dimensions held in the user’s already-tracked hand doesn’t seem like too much of a stretch, especially since this object has a gyroscope onboard, so its rotation can be measured directly. And if, as in almost every other consumer electronic device, this gyroscope sits in an IMU (inertial measurement unit) that also contains an accelerometer, the tracking quality could be almost as good as that of a dedicated VR controller.
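A gyroscope reports angular velocity rather than absolute orientation, so integrating it on its own slowly drifts. The usual remedy is to blend it with a slower but absolute reference, which here is imagined as the headset’s camera-based estimate of the pencil. The sketch below uses a complementary filter, a standard textbook technique, on a single angle. Apple has not described how, or whether, it would track Apple Pencil Pro, so the filter choice, the 1 kHz IMU rate, the roughly 30 Hz optical rate, the `alpha` weight, and the `ComplementaryFilter` name are all assumptions for illustration. A real tracker would work on full 3D orientation (for example with quaternions) and would more likely use a Kalman-style filter.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ComplementaryFilter:
    """Blends a fast but drifting gyro with slow but absolute optical fixes (one angle, degrees)."""

    alpha: float = 0.98  # assumed weight on the gyro-propagated estimate
    angle: float = 0.0   # current orientation estimate, in degrees

    def update(self, gyro_rate_dps: float, dt: float,
               optical_angle: Optional[float] = None) -> float:
        # Propagate the estimate by integrating the gyro's angular rate over dt.
        predicted = self.angle + gyro_rate_dps * dt
        if optical_angle is None:
            # No camera fix this tick: rely on the gyro alone.
            self.angle = predicted
        else:
            # Camera fix available: nudge the estimate toward it to cancel drift.
            self.angle = self.alpha * predicted + (1.0 - self.alpha) * optical_angle
        return self.angle


# Simulate 10 s of a stationary pencil whose gyro has a +1 deg/s bias.
DT = 0.001           # assumed 1 kHz IMU rate
CAMERA_EVERY = 33    # assumed optical fix roughly every 33 ms (~30 Hz)
TRUE_ANGLE = 0.0     # the pencil is not actually rotating

fused = ComplementaryFilter()
gyro_only = ComplementaryFilter()
for tick in range(10_000):
    optical = TRUE_ANGLE if tick % CAMERA_EVERY == 0 else None
    fused.update(1.0, DT, optical)
    gyro_only.update(1.0, DT)

print(f"gyro only: {gyro_only.angle:.2f} deg, fused: {fused.angle:.2f} deg")
```

The gyro-only estimate drifts by the full bias, about 10 degrees after 10 seconds, while the fused estimate stays within roughly two degrees because each optical fix pulls it back toward the true angle. An accelerometer could play the same corrective role for tilt, which is why an IMU would help.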

For 3D creativity, the squeeze and double-tap sensors would essentially give Apple and its developers a trigger and a button to work with: double tap to bring up a contextual menu, for example, or squeeze to draw or sculpt in 3D space. Meanwhile, haptic feedback would make this feel great instead of lifeless.

For 2D surfaces like your desk, fusing the input from the pressure sensor with pencil tracking and LiDAR meshing could allow Apple to calibrate virtual content to appear locked precisely to the height and orientation of the desk. The biggest iPad Pro gives you a 13-inch drawing surface, but Vision Pro could one day give you a limitless canvas for creativity.
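One way such desk calibration could work, sketched under loudly stated assumptions: every time the pressure reading shows the tip touching the desk, record the tracked tip position, then fit a plane through those points by least squares. The `fit_desk_plane` function, the z-up coordinate convention in metres, and the use of pressure as a contact signal are all hypothetical; Apple has announced nothing of the kind, and the LiDAR mesh mentioned above is not modeled here.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]  # (x, y, z), z = height in metres (assumed convention)


def det3(m: List[List[float]]) -> float:
    """Determinant of a 3x3 matrix by cofactor expansion."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_desk_plane(contacts: List[Point]) -> Tuple[float, float, float]:
    """Hypothetical helper: least-squares fit of z = a*x + b*y + c to tip
    positions recorded while the pressure reading indicates desk contact."""
    if len(contacts) < 3:
        raise ValueError("need at least three contact points")
    sxx = sum(x * x for x, _, _ in contacts)
    sxy = sum(x * y for x, y, _ in contacts)
    syy = sum(y * y for _, y, _ in contacts)
    sx = sum(x for x, _, _ in contacts)
    sy = sum(y for _, y, _ in contacts)
    sxz = sum(x * z for x, _, z in contacts)
    syz = sum(y * z for _, y, z in contacts)
    sz = sum(z for _, _, z in contacts)
    n = float(len(contacts))
    # Normal equations A @ [a, b, c] = rhs, solved with Cramer's rule.
    A = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    rhs = [sxz, syz, sz]
    d = det3(A)
    if abs(d) < 1e-12:
        raise ValueError("contact points are collinear; cannot fit a plane")
    coeffs = []
    for col in range(3):
        m = [row[:] for row in A]  # copy A, then swap in rhs as column `col`
        for r in range(3):
            m[r][col] = rhs[r]
        coeffs.append(det3(m) / d)
    return coeffs[0], coeffs[1], coeffs[2]


# Hypothetical taps on a desk whose surface is z = 0.01x - 0.02y + 0.75.
taps = [(x, y, 0.01 * x - 0.02 * y + 0.75)
        for x, y in [(0.0, 0.0), (0.5, 0.0), (0.0, 0.4), (0.5, 0.4), (0.25, 0.2)]]
a, b, c = fit_desk_plane(taps)
print(round(a, 3), round(b, 3), round(c, 3))
```

In this framing, each tap would be a direct measurement of where the surface is, which is what would let virtual content be pinned to the desk’s true height and tilt rather than to an estimate from the room mesh alone.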

But Is It Actually Happening?

In March, MacRumors cited “a source familiar with the matter” as saying Apple had internally tested a future Apple Pencil with Vision Pro, though the outlet didn’t provide further details.

That Apple didn’t tease this today doesn’t mean it won’t happen. Today’s event was focused on iPads, and adding Pencil Pro support to Vision Pro would require significant changes to visionOS. If this is coming, Apple would likely announce or tease it at WWDC, its annual event where it showcases upcoming improvements to its operating systems.

Apple previously confirmed that WWDC24 will take place June 10 and include “visionOS advancements”. We’ll be watching closely for any mention or tease of Apple Pencil Pro.