Apple explained the three ways to enter text in Vision Pro.

One of the iPhone’s most revolutionary features was rapid text entry without a physical keyboard – something existing VR & AR headsets have so far failed to achieve with floating virtual keyboards.

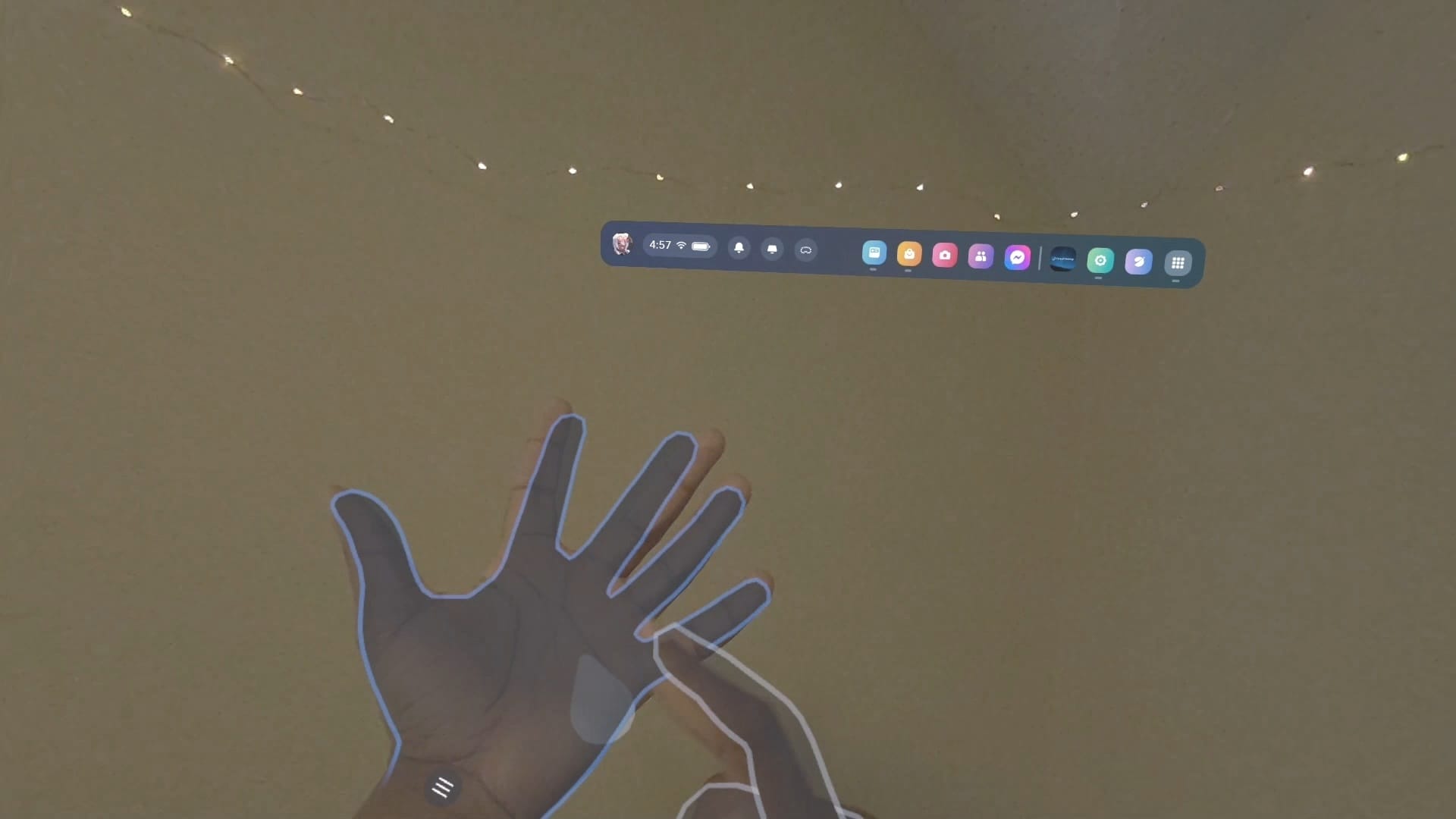

Holding tracked controllers precludes the type of rapid multi-finger movements needed to type on a keyboard – physical or virtual – and up until very recently Meta’s approach to text entry with controller-free hand tracking on Quest had you pointing at and pinching keys from a distance, painfully slowly.

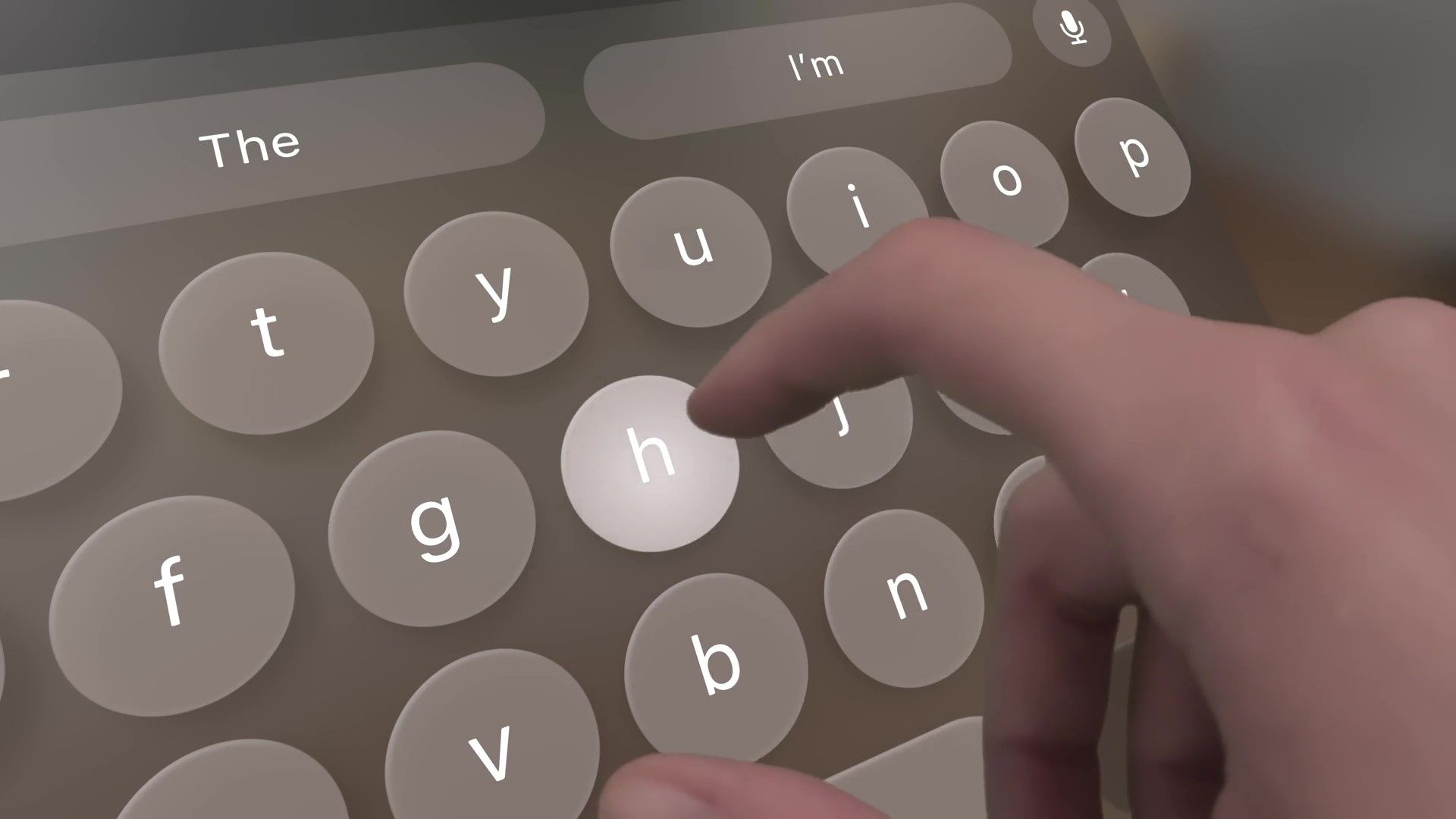

In February Meta released an “experimental” feature called Direct Touch, letting Quest users directly tap the interface, including the virtual keyboard, with their hands. Apple’s visionOS takes this same approach as its primary text entry method, and interestingly Apple calls this direct touch too.

Unlike Quest, however, Vision Pro features depth sensors, which should make for noticeably higher quality hand tracking, and as you’d expect Apple has refined the software experience with polish and subtle details not present in Meta’s solution.

The virtual buttons hover slightly above the pane “to invite pushing them directly”, an approach Google’s Owlchemy is taking too. Hovering over a button with your finger highlights it, and this highlight gets brighter the closer you get to “help guide the finger to the target”.

Pressing the button triggers an instant animation and sound effect spatialized to the key’s location.
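To make the proximity highlight concrete, here is a minimal Python sketch of how a highlight that brightens as the finger approaches could be computed. This is not Apple’s implementation: the function name `highlight_intensity`, the 5 cm hover range, and the linear ramp are all assumptions chosen purely for illustration.

```python
def highlight_intensity(distance_m, hover_range_m=0.05, min_intensity=0.2):
    """Return a highlight brightness in [0, 1] for a fingertip near a key.

    distance_m is the fingertip's distance from the key surface in metres.
    hover_range_m and min_intensity are hypothetical tuning values, not
    figures Apple has published.
    """
    # Outside the hover range the key is not highlighted at all.
    if distance_m >= hover_range_m:
        return 0.0
    # Treat a finger that has pushed past the surface as touching it.
    d = max(distance_m, 0.0)
    # closeness goes from 0 at the edge of the hover range to 1 at contact.
    closeness = 1.0 - d / hover_range_m
    # Ramp linearly from the minimum visible highlight up to full brightness.
    return min_intensity + (1.0 - min_intensity) * closeness
```

With these assumed values, a fingertip 1 cm from a key gets a brighter highlight than one 3 cm away, and a fingertip outside the 5 cm range gets none. A real system would likely smooth the value over time and use an eased rather than linear curve.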

Of course, this keyboard still lacks haptic feedback, and holding your hands up in the air for an extended period of time can be fatiguing. So visionOS also supports two other text entry methods: Apple’s Magic Keyboard, and voice entry.

Apple describes pairing its Magic Keyboard as “great for getting things done”. It didn’t disclose whether other keyboards are supported, or if there’s an automatic pairing system for Magic Keyboard.

Vision Pro has six microphones, so voice typing should have high quality input even in noisy environments.

One possible solution for text entry Apple didn’t discuss would be to pin the virtual keyboard to a real surface like your desk, giving you free haptic feedback and the ability to rest your hands. This should be possible for developers to build with ARKit’s scene understanding tools though, and we expect some will try this approach.

How well the floating virtual keyboard on visionOS works will depend on just how good the hand tracking is on Vision Pro. Text entry wasn’t something we got to try in our hands-on time, but it’s something we’ll be keen to test out as soon as we get our hands on the device.