Today at WWDC, Apple announced Apple Vision Pro, an upcoming AR/VR headset.

Apple CEO Tim Cook announced Vision Pro just minutes ago as “one more thing” at the end of Apple’s WWDC 23 keynote presentation. He described the headset as “a revolutionary new product” made to power “an entirely new AR platform.”

Vision Pro will ship in the US in early 2024, available through Apple online or in-store starting at $3,499. Apple says Vision Pro will ship to more countries “later next year.”

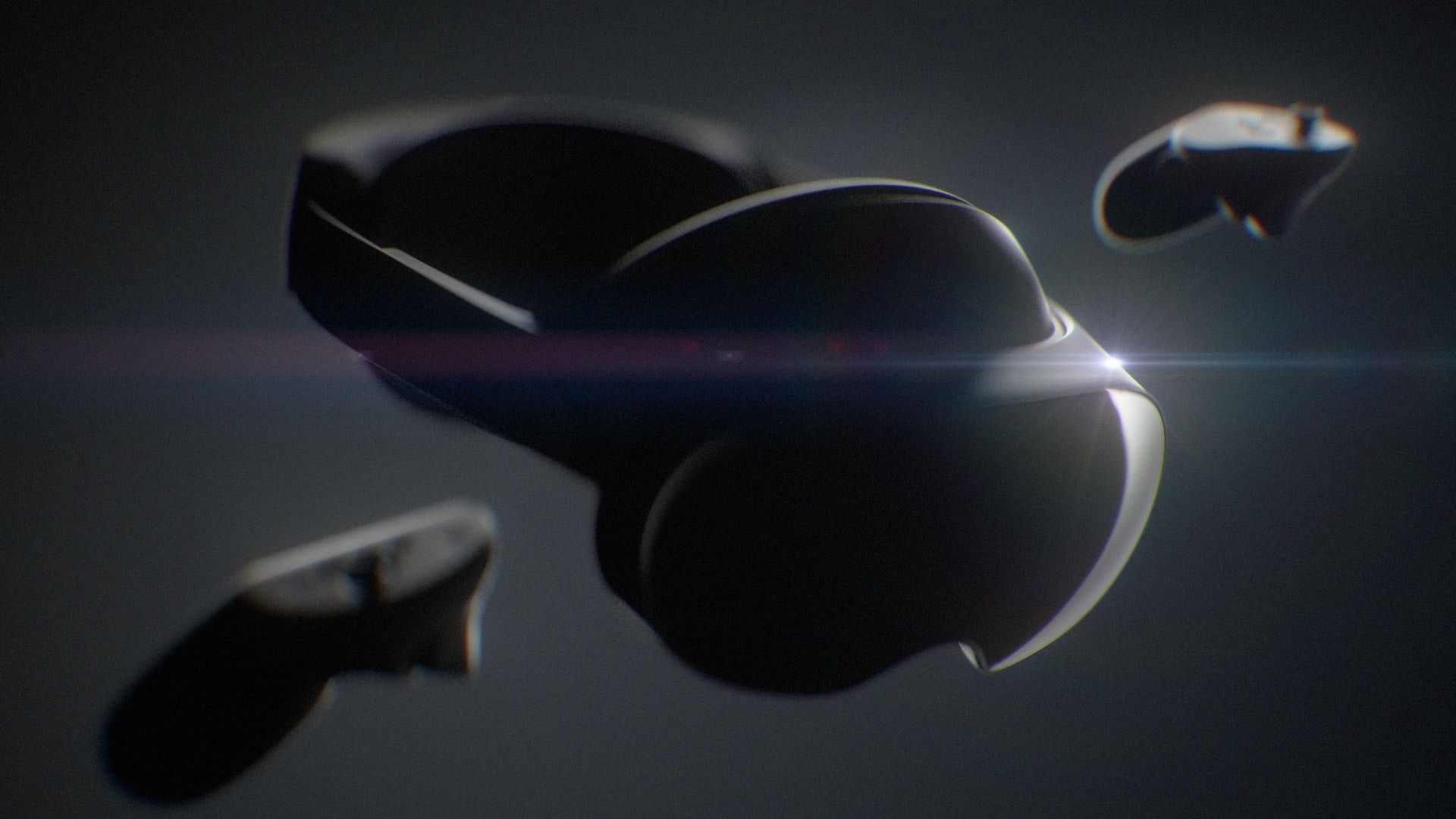

Apple Vision Pro

Tim Cook described it as the “first Apple product you look through and not at.” According to Apple, Vision Pro is “a revolutionary spatial computer that seamlessly blends digital content with the physical world.”

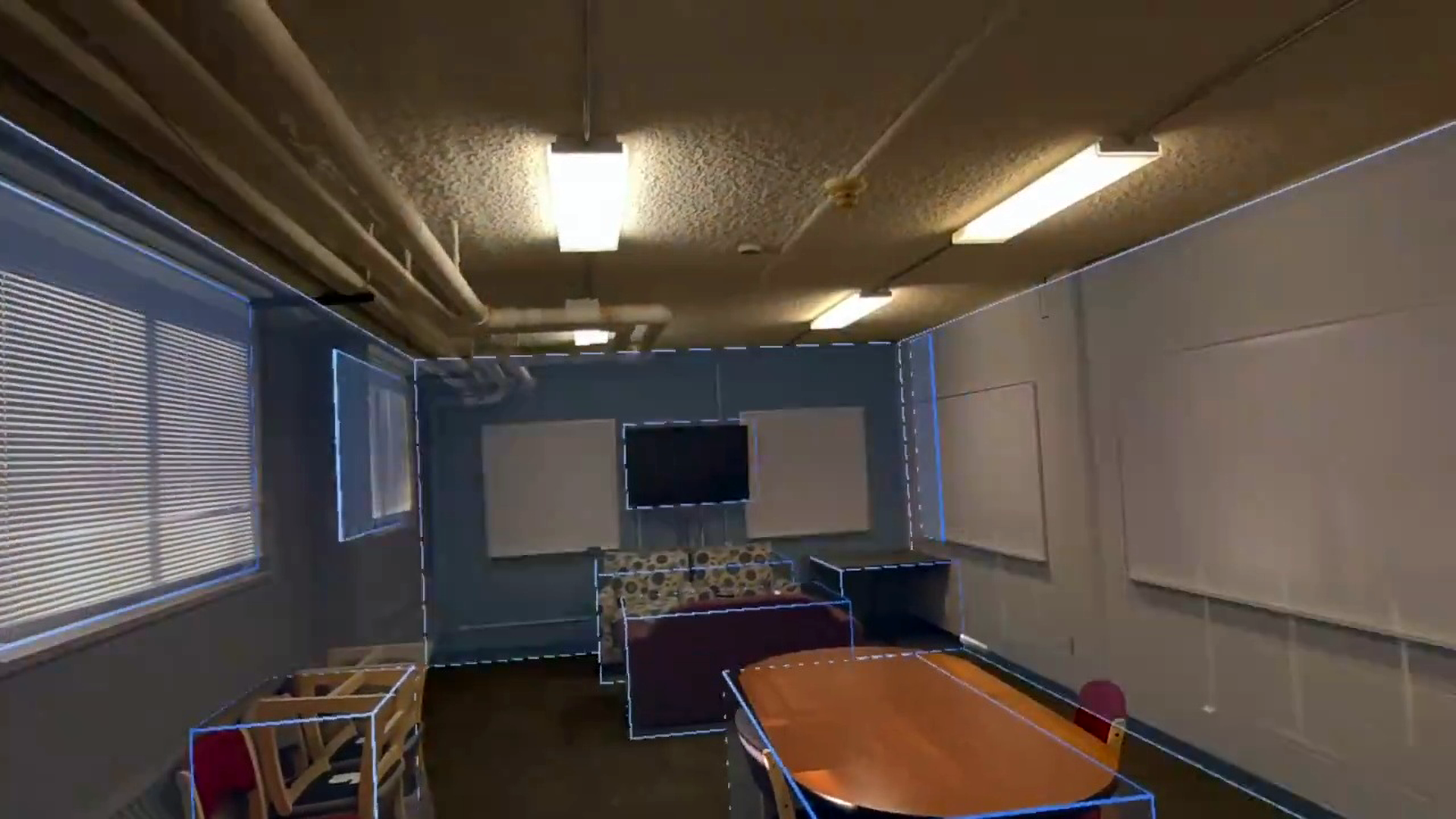

The headset allows users to place apps around a representation of their real environment. “See, hear and interact with digital content just like it’s in your space,” Tim Cook said.

The headset features a slim and sleek design, with an outward-facing display that can show a live view of the user’s eyes on the exterior using a feature called ‘EyeSight’.

The front of the headset is made from a single piece of glass connected to an aluminum alloy frame. The facial interface, which Apple calls the ‘Light Seal’, “comes in a range of shapes and sizes” and is made of a soft textile.

The strap, or ‘Head Band’, is “three-dimensionally knitted as a single piece to provide cushioning, breathability, and stretch” and features a dial to adjust its fit against your head. Like the Light Seal, it will be available in multiple sizes, and the strap detaches from the side of the headset, which Apple says makes it “easy to change to another size or style of band.”

The headset uses an external wired battery pack, which Apple says will provide up to 2 hours of use. Alternatively, the headset can be plugged into a power outlet for all-day use.

There’s also an Apple Watch-like dial on the side of the headset – a ‘Digital Crown’ that lets you move from full AR, through 180 degrees of VR (with an AR view behind you), to full VR.

Powered by Apple Silicon

Vision Pro features “a unique dual-chip design” that pairs Apple’s M2, the same processor found in its MacBooks, with a new R1 chip that processes input from the headset’s 12 cameras, five sensors, and six microphones.

The headset also features a “high-performance eye tracking system” that uses LEDs and infrared cameras inside the headset, letting users interact without any controllers and “accurately select elements just by looking at them.”

Vision Pro also includes a LiDAR scanner and TrueDepth camera, which the headset uses to “create a fused 3D map of your surroundings” onto which it accurately renders content.

visionOS

“Just as the Mac introduced us to personal computing, and iPhone introduced us to mobile computing, Apple Vision Pro introduces us to spatial computing,” said Tim Cook.

Vision Pro runs a new operating system developed by Apple called visionOS, which the company says is the first operating system “designed from the ground up for spatial computing” and the “start of an entirely new platform.”

visionOS will feature a user interface “controlled by the most natural and intuitive inputs possible — a user’s eyes, hands, and voice.”

Eye tracking and hand tracking support will allow users to “browse through apps by simply looking at them, tapping their fingers to select, flicking their wrist to scroll, or using voice to dictate.”

visionOS will include its own App Store, offering users custom-built apps for Vision Pro, alongside “hundreds of thousands” of compatible iPhone and iPad apps that “automatically work with the new input system for Vision Pro.”

Users will be able to position apps around a representation of their environment using a “three-dimensional interface that frees apps from the boundaries of a display so they can appear side by side at any scale.”

Vision Pro will support Magic Keyboard and Magic Trackpad, while also allowing users to display and control their Mac within the headset via a virtual 4K display.

Apps like FaceTime will let users arrange the other people on a call around their environment as life-sized tiles, with spatial audio placing each person’s voice to match their position. Those joining a FaceTime call via Vision Pro will be presented to other users by a ‘Persona’ – a “digital representation” of the user “created using Apple’s most advanced machine learning techniques” that reflects the user’s face and hand movements in real time.

This story is developing – more details to follow.