The most technically impressive VR demo I tried at GDC 2023 felt familiar.

I passed objects back and forth between my hands, tossed them into the air and caught them, twisted dials, grabbed and used spray bottles, and typed on a keyboard by pinching one floating letter at a time. I interacted with my squishy surroundings like a toddler at daycare, just as I have for the better part of the last decade in Owlchemy’s Job Simulator on every major VR system, including Vive, Rift, Quest, and PSVR.

The software from Google’s Owlchemy Labs ran on Quest 2. Instead of interacting with tracked controllers, I used Meta’s latest Hand Tracking 2.1 technology with Owlchemy’s fine-tuning layered on top. I came out of my demo and asked Andrew Eiche, the new CEO of Owlchemy Labs, whether he thinks they’ve achieved the full interaction experience of Job Simulator with only hand tracking.

“I think we got it,” Eiche says. “This demo that we’re giving you here feels a lot like what 2015 HTC Vive at GDC demo was.”

Hand Tracking & Mass Market VR Games

“How do you get a billion people to play VR?” Eiche asks. “The controllers are a barrier. They are built like video game controllers.”

Those words echo what Eiche said late last year, and plenty of VR gamers reading this are probably already tapping away at their keyboards, enjoying that satisfying haptic feedback as they outline exactly how Eiche is wrong. If that’s you, please take a minute to get to the end of this piece so you can grasp the rest of this glimpse of the future Eiche offered me.

Eiche started at Owlchemy in 2015 and just moved from technical lead to overall lead at a company responsible for some of the highest-selling VR games across every single platform they’re on. While Owlchemy’s subsequent games have grown in complexity, adding depth, breadth and new mechanics, it’s still Job Simulator that so often seems to sit on the top-seller lists. Does that path suggest Owlchemy lost its magic touch over ensuing releases?

Given what I saw in this tech demo, I think it would be more useful to suggest a different narrative for Owlchemy’s work. In 2017, Google faced a reality in which Meta planned to invest at least $50 billion over half a decade focused on building a platform for VR games with controller tracking. Google buying Owlchemy allowed the company to spend a fraction of that and task its developers with exploring exactly what the limits of tracked controllers are, while also helping prepare for a much larger opportunity.

“We’re here to talk about hand tracking to kind of plant a flag for the industry to say hand tracking is great, it’s already awesome, and this is the target you should be shooting for,” Eiche said. “You are playing the worst version of our hand tracking that you will play because it’s already better at our studio. It’s gonna be even better a year from now. It’s gonna be better as the cameras that were intended to do primarily spatial tracking also start to understand and be built for hands.”

VR’s Next Software Revolution

Meta’s Chris Pruett made clear in a talk at GDC the company didn’t know what kind of mixed reality games would draw in buyers, and Epic Games’ Tim Sweeney made clear his vision for the metaverse is that “VR goggles….are not required.”

Meanwhile, developers using Moscone’s hallways and hotel rooms in San Francisco demonstrated a future in which VR headsets power a new class of mixed reality gaming experience. Titles like Spatial Ops from Resolution Games and Laser Dance from Thomas Van Bouwel cut across reality in sharp, crisp lines, making use of two or more places at once in ways developers never could before. These demos showed that developers who’ve pushed through VR’s limitations to this moment are poised to surprise a whole generation, and potentially hundreds of millions of people, with VR-powered games.

“If Xbox can sell $129 elite controller, controllers aren’t going anywhere, but…we have to meet the rest of the world where they are,” Eiche told me. “We need to think about the mass market in general. So what [hand tracking is] going to do to VR is it’s…going to further open it up much in the way that the Quest opened it up.”

“VR is in a software problem, not a hardware problem,” Eiche said. “We need to start thinking about pushing further on the software end because the headsets, the next generation, the generation after, are getting good enough, much like the mobile phones did at their time, where it’s a question of can we build it? Not if we can build it…everybody starts from zero. They start with two hands picking things up. And there haven’t been these kind of coalescing of standards…steal our stuff, use our interaction….the future is bright and even with the software stack and the hardware we have now, there’s still so much more uncharted territory. And the other thing, and this is not to take a shot at Tim Sweeney. Tim Sweeney has done genius things that like I could never imagine, but it’s very easy to sound smart and talk shit. It’s very easy. It’s much, much harder to sound smart and actually put the work in to make something succeed.”

From Tomato Presence To Squishy Pills

I tried Owlchemy’s demo twice. The first time I just wanted to assess whether it felt like Job Simulator, and it passed that test with flying colors.

The second time I examined the technical details.

“Tomato presence, when you pick up the object, your hand disappears and is replaced by just the object that exists in Vacation Simulator,” Eiche says about their previous work. “But because hand tracking is so viscerally connected to your hand, unlike holding a controller where you just had this very binary state, pick up or drop, we actually leave the hand visual in and we try to adapt both the hand visual and the object you’re picking up to match.”

Through endless testing and iteration, Owlchemy’s demo shows how they’ve closed the gap between today’s passable hand tracking and satisfying, reliable interactions. Some of Owlchemy’s core magic here is hidden inside stretchy pills outlining the shape of the hands.

“The tips of your fingers, whether or not you noticed that, your fingers stretched,” Eiche explained. “There’s a large tech art gap between making a bunch of segmented finger things and making some singular cohesive thing. And so we are looking at that from an R&D perspective, but we chose the pills because it works really well.”

I reached toward a dial and pinched my fingers together. Just as I felt the haptic feedback of their touch against one another, my pill-fingers seemed to clamp onto the dial. I moved my arm toward my body and watched the farthest pills stretch their connection with the dial before finally snapping away.
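
Owlchemy has not published how this grab works, but the behavior I saw can be approximated with a small state machine. The sketch below is a guess at the general technique, not the studio's implementation: the `DialGrip` class, its method, and every threshold are hypothetical names and numbers chosen only for illustration. A pinch near the dial starts the grip, the drawn fingers stay attached while the real hand pulls away, and the grip lets go once the stretch passes a limit or the fingers open.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]

# All thresholds are illustrative guesses, not Owlchemy's or Meta's values.
PINCH_START_M = 0.015    # thumb-index gap that counts as starting a pinch
PINCH_RELEASE_M = 0.03   # wider gap needed to let go (hysteresis against jitter)
GRAB_ZONE_M = 0.06       # how close the pinch must be to the dial to grab it
SNAP_AWAY_M = 0.15       # stretch distance at which the grip snaps free


@dataclass
class DialGrip:
    """Hypothetical per-dial grab state, updated once per tracking frame."""
    dial_pos: Vec3
    held: bool = False

    def update(self, thumb_tip: Vec3, index_tip: Vec3) -> float:
        """Advance one frame and return the visual finger stretch in metres."""
        pinch_gap = math.dist(thumb_tip, index_tip)
        pinch_point = tuple((a + b) / 2 for a, b in zip(thumb_tip, index_tip))
        reach = math.dist(pinch_point, self.dial_pos)

        if not self.held:
            # Start a grab only when pinching inside the acceptable zone.
            if pinch_gap <= PINCH_START_M and reach <= GRAB_ZONE_M:
                self.held = True
        elif pinch_gap > PINCH_RELEASE_M or reach > SNAP_AWAY_M:
            # Opening the fingers or pulling too far snaps the hand free.
            self.held = False

        # While held, the drawn fingertips stay on the dial, so the stretch
        # equals the gap between the real pinch point and the dial.
        return reach if self.held else 0.0
```

Calling `update` each frame with the tracked thumb and index positions returns how far a renderer would need to stretch the finger pills. The separate start and release gaps are a common way to keep a noisy pinch signal from flickering between held and dropped.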

“I think your fingers are always gonna stretch a little bit. It’s all in the subtlety of it, right? The thing is you’re grasping at air for lack of a better term. So you don’t have a physical switch to hold onto,” Eiche explains. “So we have to have some acceptable zone which counts as you using the thing. And on the keyboard, if you go back and put your hands there, the keys actually snap to your fingers the opposite way. If you put your fingers near the bubble, they move to your fingers to stay within your hand within a certain area. The letters always show through your hands, so you never block out parts of the keyboard.”
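
The keyboard behavior Eiche describes, where keys drift toward nearby fingers but stay within a certain area, could look something like the following. This is a minimal sketch under my own assumptions: the function name `snapped_key_position` and both distances are hypothetical, and the real demo may use an entirely different approach.

```python
import math

# Illustrative guesses only; the demo's real tuning is not public.
SNAP_ZONE_M = 0.04    # a fingertip must be this close for a key to react
MAX_OFFSET_M = 0.02   # a key never drifts farther than this from its home spot


def snapped_key_position(home, fingertip):
    """Return where to draw a key bubble given its home spot and a fingertip."""
    dist = math.dist(home, fingertip)
    if dist == 0.0 or dist > SNAP_ZONE_M:
        # Finger is exactly on the key or too far away: leave the key at home.
        return tuple(home)
    # Move toward the fingertip, but clamp travel so the key stays near home.
    travel = min(dist, MAX_OFFSET_M)
    return tuple(h + (f - h) * travel / dist for h, f in zip(home, fingertip))
```

Clamping the travel keeps the layout recognizable: a key meets the finger partway without ever wandering far from its home position.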

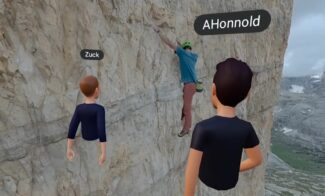

We can’t wait to see the multiplayer hand tracking demo the company is still working on behind closed doors. What I saw at GDC 2023, though, was impressive enough to make me believe more game developers than I expected are going to open VR up wider than Quest’s Touch controllers by simply letting you grasp at air.

“There’s always genres that aren’t perfectly playable, but the funny thing about games is, is that designers are geniuses,” Eiche says. “And they figure out ways to make genres work. You tell me the most competitive shooter and most popular competitive shooter in the world, the majority of players are playing on their phones. Think about that 10 years ago, right? Fortnite? Phones. Call of Duty? Phones, Call of Duty mobile. These are huge, huge games. And they’re playing ’em on a platform that when iPhone [3GS] came out, you would’ve been like, well, it just can’t do shooters.”

Owlchemy’s demo made a believer out of me. Google could unleash the company’s insights on the user interface for its follow-up to Daydream, or offer frameworks to developers allowing them to start with an impressive set of building blocks for hand tracking. Or Owlchemy could simply release its hand tracking multiplayer game as an example to others.

Perhaps most importantly, as Eiche explains it, Touch controllers should improve further with hand tracking layered on top.

“It doesn’t preclude any other input system. The craziest thing about hand tracking is it’s going to make controller tracking better because we’ll be able to track your hands while you’re holding the controller,” Eiche said. “And what’s to stop you then from peripherals? Not even having to be smart peripherals. What’s stopping, you know, Ryan at Golf Plus from making a golf club that’s cut off and you hold that to swing, or a paintbrush, instead of having to like figure out how a paintbrush works and you’re a painter, you pick up a real paintbrush cuz why not? And you just tap that into the world and say, all right, this is gonna work. And then you paint.”