Stereoscopic color passthrough, spatial anchoring, and scene understanding will deliver more realistic mixed reality experiences.

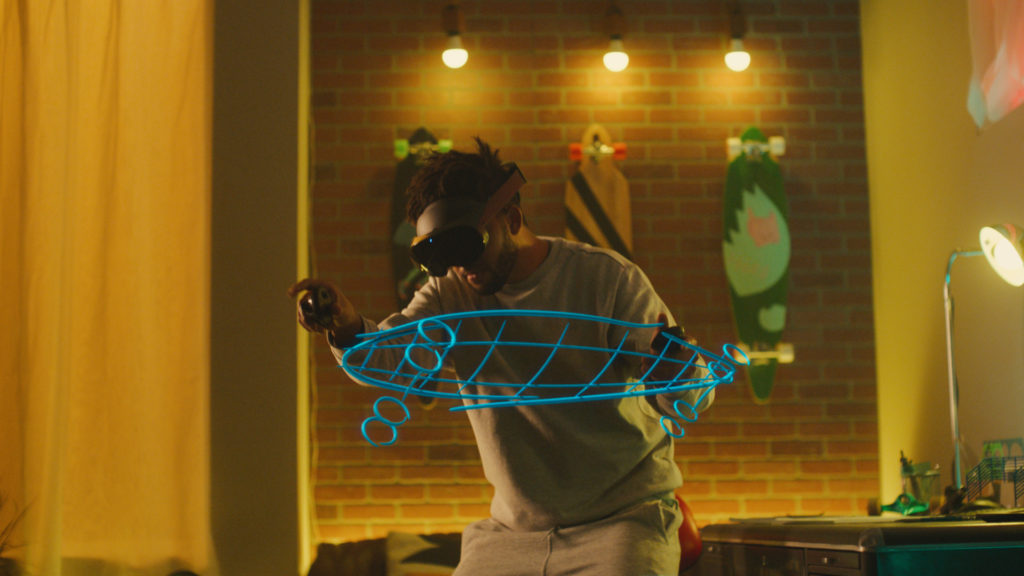

According to Sarthak Ray, a product manager at Meta, the ability to see and interact with the world around you through mixed reality opens up new opportunities for VR. “You can play with friends in the same physical room or productivity experiences that combine huge virtual monitors with physical tools, and it’s a step toward our longer-term vision for augmented reality.”

A mixed reality experience is most effective when the VR/AR device can convincingly blend the physical and virtual worlds. That means the headset has to do more than provide a 2D video feed, according to a recent blog post on Oculus.com.

To further its commitment to developing cutting-edge VR technologies, Meta has announced the launch of Meta Reality, a new mixed reality system, and has offered an inside look at what goes into creating an exceptional experience.

Stereoscopic Color Passthrough for Spatial Awareness

Meta recognizes the importance of color Passthrough and stereoscopic 3D technology in delivering comfortable and immersive mixed reality experiences.

“Meta Quest Pro combines two camera views to reconstruct realistic depth, which ensures mixed reality experiences built using color Passthrough are comfortable for people,” explained Meta Computer Vision Engineering Manager Ricardo Silveira Cabral. “But also, stereo texture cues allow the user’s brain to do the rest and infer depth even when depth reconstruction isn’t perfect or is beyond the range of the system.”

For example, when using Passthrough, your brain learns that the coffee cup is about as far away from your hand as the pen that’s sitting right next to it.
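To make the idea of reconstructing depth from two camera views concrete, here is a minimal Python sketch of the textbook stereo relation for two rectified, parallel cameras: depth equals focal length times baseline divided by disparity. This is not Meta's pipeline, which is not described at this level of detail. The function name and the focal length and baseline values are assumptions chosen purely for illustration.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float,
                         disparity_px: float) -> float:
    """Depth in metres of a point seen by two rectified, parallel cameras."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Assumed camera parameters, for illustration only (not Quest Pro specifications).
FOCAL_LENGTH_PX = 500.0
BASELINE_M = 0.064

near = depth_from_disparity(FOCAL_LENGTH_PX, BASELINE_M, 64.0)  # roughly 0.5 m
far = depth_from_disparity(FOCAL_LENGTH_PX, BASELINE_M, 8.0)    # roughly 4 m

# The same one-pixel matching error shifts the far estimate much more than the near one.
near_error = abs(depth_from_disparity(FOCAL_LENGTH_PX, BASELINE_M, 63.0) - near)
far_error = abs(depth_from_disparity(FOCAL_LENGTH_PX, BASELINE_M, 7.0) - far)
print(near, far, near_error, far_error)
```

Because depth is inversely proportional to disparity, distant points are estimated less precisely, which fits the remark in the quote that depth reconstruction can be imperfect or fall outside the range of the system.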

Oculus Insight, the first standalone tracking system for a consumer VR device, captured only 100 interest points to determine the headset’s position in a room. It has since been upgraded to enable depth sensing. For comparison, Meta Quest Pro can produce up to 10,000 interest points per second under different lighting conditions, allowing it to build an improved 3D representation of your physical space in real time.

Through this process, the system creates a 3D model of the physical world that’s continuously updated. It then uses this data in a predictive rendering framework that produces images of your real-world environment. To compensate for rendering latency, the reconstructions are adjusted to the user’s left and right eye views through the Asynchronous TimeWarp algorithm.

According to Silveira Cabral, the use of a color stereoscopic camera allows the team to deliver a more realistic interpretation of the real world.

Scene Understanding for Blending Virtual Content Into the Physical World

The Scene Understanding component of the Presence Platform was introduced during the Connect 2021 event. This technology enables developers to create complex and scene-aware mixed reality experiences. According to Wei Lyu, the product manager of Meta Quest Pro, the company’s Scene Understanding component was designed to help developers focus on building their businesses and experiences.

“We introduced Scene Understanding as a system solution,” said Lyu.

Scene Understanding is broken up into three areas:

- Scene Model – A single, comprehensive, up-to-date, and system-managed representation of the environment consisting of geometric and semantic information. The fundamental elements of a Scene Model are Anchors, and each Anchor can have various components attached to it. For example, a user’s living room is organized around individual Anchors with semantic labels, such as the floor, ceiling, walls, desk, and couch. Each Anchor also carries a simple geometric representation: a 2D boundary or a 3D bounding box.

- Scene Capture – A system-guided flow that lets users walk around and capture their room architecture and furniture to generate a Scene Model. In the future, the goal will be to deliver an automated version of Scene Capture that doesn’t require people to manually capture their surroundings.

- Scene API – An interface that apps can use to access spatial information in the Scene Model for various use cases, including content placement, physics, navigation, etc. With Scene API, developers can use the Scene Model to have a virtual ball bounce off physical surfaces in the actual room or a virtual robot that can scale the physical walls.
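The three pieces above can be pictured with a small Python sketch. It is not the real Scene API: every class, field, and function name here (`Anchor`, `SceneModel`, `with_label`, `reflect`) is hypothetical, and the room data is made up. It only illustrates the described shape of a Scene Model, anchors with a semantic label plus a 2D boundary or 3D bounding box, and how an app might use a surface from it to bounce a virtual ball.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Anchor:
    """Hypothetical stand-in for a Scene Model anchor."""
    label: str                                      # semantic label, e.g. "floor"
    position: Vec3                                  # anchor origin in room space (metres)
    normal: Optional[Vec3] = None                   # unit normal for planar anchors (assumed field)
    boundary_2d: Optional[List[Tuple[float, float]]] = None
    bounding_box_3d: Optional[Tuple[Vec3, Vec3]] = None  # (min corner, max corner)


@dataclass
class SceneModel:
    anchors: List[Anchor] = field(default_factory=list)

    def with_label(self, label: str) -> List[Anchor]:
        """Return every anchor carrying the given semantic label."""
        return [a for a in self.anchors if a.label == label]


def reflect(velocity: Vec3, normal: Vec3, restitution: float = 1.0) -> Vec3:
    """Bounce a velocity off a surface with unit normal n: v' = v - (1 + e)(v.n)n."""
    dot = sum(v * n for v, n in zip(velocity, normal))
    return tuple(v - (1.0 + restitution) * dot * n
                 for v, n in zip(velocity, normal))


# Made-up room: a floor plane and a desk volume.
room = SceneModel([
    Anchor("floor", (0.0, 0.0, 0.0), normal=(0.0, 1.0, 0.0),
           boundary_2d=[(-2.0, -2.0), (2.0, -2.0), (2.0, 2.0), (-2.0, 2.0)]),
    Anchor("desk", (1.0, 0.0, 0.5),
           bounding_box_3d=((0.5, 0.0, 0.2), (1.5, 0.75, 0.8))),
])

floor = room.with_label("floor")[0]
bounced = reflect((1.0, -2.0, 0.0), floor.normal, restitution=0.5)
print(bounced)
```

In this sketch the app never scans the room itself. It only queries a system-managed model by label, which mirrors the division of labor described above between Scene Capture, the Scene Model, and the Scene API.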

“Scene Understanding lowers friction for developers, letting them build MR experiences that are as believable and immersive as possible with real-time occlusion and collision effects,” added Ray.

Spatial Anchors for Virtual Object Placement

Developers can create first-class mixed reality experiences using Spatial Anchors, a core capability of the Meta Quest Pro platform. For instance, a product designer can anchor multiple 3D models throughout a physical space using a platform like Gravity Sketch to create a seamless environment for their product.

“If stereoscopic color Passthrough and Scene Understanding do the heavy lifting to let MR experiences blend the physical and virtual world, our anchoring capabilities provide the connective tissue that holds it all together,” said Meta Product Manager Laura Onu.

Together with the Scene Model, Spatial Anchors can be used to create rich and automatic environments for a variety of experiences and situations. For instance, you can create a virtual door that’s connected to a physical wall. This would be a huge step for companies looking at XR technology for their enterprise solutions in digital twinning, warehouse automation, and even robotics.

For now, it seems like Meta is more focused on social experiences. “By combining Scene Understanding with Spatial Anchors, you can blend and adapt your MR experiences to the user’s environment to create a new world full of possibilities,” according to Onu, who adds, “You can become a secret agent in your own living room, place virtual furniture in your room or sketch an extension on your home, create physics games, and more.”

Shared Spatial Anchors for Co-Located Experiences

In addition, Meta has added the Shared Spatial Anchors feature to the Presence Platform. This allows you to create local multiplayer experiences by sharing your anchors with other users in the same space. You and your friends could then play a virtual board game on top of a physical table, much as Tilt Five delivers its gaming experiences.
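One way to see why a shared anchor enables co-located play: each headset tracks the room in its own coordinate frame, but if both know where the same anchor sits in their own frame, any point can be converted from one frame to the other by going through the anchor. The Python sketch below shows that geometry on the floor plane only (position plus yaw). It is an illustration under simplifying assumptions, not Meta's Shared Spatial Anchors API; `Pose2D` and `convert_a_to_b` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Point = Tuple[float, float]


@dataclass
class Pose2D:
    """Anchor pose on the floor plane as seen by one device (hypothetical type)."""
    x: float
    z: float
    yaw: float  # rotation about the vertical axis, in radians

    def to_local(self, point: Point) -> Point:
        """Device-frame point -> anchor-local point (inverse of to_device)."""
        dx, dz = point[0] - self.x, point[1] - self.z
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        return (c * dx + s * dz, -s * dx + c * dz)

    def to_device(self, local: Point) -> Point:
        """Anchor-local point -> device-frame point (rotate, then translate)."""
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        return (self.x + c * local[0] - s * local[1],
                self.z + s * local[0] + c * local[1])


def convert_a_to_b(anchor_in_a: Pose2D, anchor_in_b: Pose2D, point_a: Point) -> Point:
    """Express a point from device A's frame in device B's frame via the shared anchor."""
    return anchor_in_b.to_device(anchor_in_a.to_local(point_a))


# Made-up poses: the same physical anchor as observed by two headsets.
anchor_in_a = Pose2D(1.0, 2.0, 0.0)
anchor_in_b = Pose2D(0.0, 0.0, math.pi / 2)

# A game piece one metre along the anchor's local x axis, given in A's frame.
piece_in_b = convert_a_to_b(anchor_in_a, anchor_in_b, (2.0, 2.0))
print(piece_in_b)
```

Both players then place the game piece at the same physical spot on the table even though their headsets disagree about where the room's origin is.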

A Winning Combination for Mixed Reality

The combination of Spatial Anchors, Passthrough, and Scene Understanding can help create a rich and interactive environment that’s designed to look and feel like the real world. Avinav Pashine, product manager of Meta Quest Pro, noted that there are many trade-offs involved in creating a great and comfortable mixed reality environment for users.

The future of Meta Reality is still evolving as the company continuously improves the platform through software updates and the hardware innovations that will be delivered in the next generation of its products. Silveira Cabral noted that the company’s first product is an important step in the evolution of Meta Reality.

“We want to learn together with developers as they build compelling experiences that redefine what’s possible with a VR headset. This story’s not complete yet—this is just page one.”

Image Credit: Meta

The post Meta Unveils Its Meta Reality Mixed Reality System appeared first on VRScout.