Meta research suggests VR’s most transformative gains in telepresence and visual realism may come from advances in display brightness and dynamic range.

Speaking on Meta CTO Andrew Bosworth’s podcast, the company’s head of display systems research talked about the enormous gap in brightness between the 100 nits provided by Meta’s market-leading Quest 2 headset and the more than 20,000 nits provided by its Starburst research prototype. The latter can match even bright indoor lighting while far exceeding today’s highest-performing high dynamic range (HDR) televisions, which top out at around 1,000 nits.

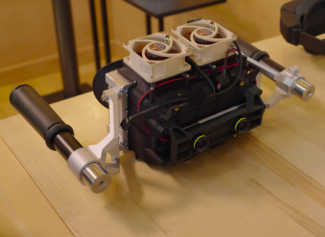

Douglas Lanman, Meta’s top display researcher, referred to this gap as what “we most want, but can least deliver right now.” At 5 to 6 pounds with its heat sinks, powerful light source and optics, the prototype is so heavy that it has to be suspended from above and held to the face by handles to be used comfortably. While we know Sony’s PlayStation VR 2 display will bring HDR to consumer VR for the first time, its exact brightness and dynamic range are unknown.

“You mentioned that you sort of feel your eye responding to it a certain way,” Meta Research Scientist Nathan Matsuda told Tested’s Norman Chan when he tried Starburst. “We know that there are a variety of perceptual cues that you get from that expanded luminance, and part of that is due to work that was done for the display industry for televisions and cinema, but of course when you have a more immersive display device like this where you have wide field of view, binocular parallax and so on, we don’t know if the perceptual responses actually map directly from the prior work that had been done with TVs, so one of the reasons we built this to begin with is so we can start to unravel where those differences are, where the thresholds might be where you start to feel like you’re looking at a real light instead of a picture of a light, which will then eventually lead us to being able to build devices that then content creators can produce content that makes use of this full range.”

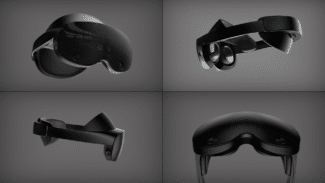

For those who missed it, Meta offered an unprecedented look at its prototype VR headset research this week, paired with the announcement of a goal to one day pass the “visual Turing test.” Passing the test would mean making a VR headset with visuals indistinguishable from reality. On Bosworth’s podcast, Boz to the Future, Lanman detailed the challenges in advancing VR displays toward this goal in four areas (resolution, varifocal, distortion correction, and HDR), with the last described as perhaps the most challenging to fully achieve.

Lanman:

In these [Starburst] prototypes we’ve built, you look at a sunset… And if we wanna talk about presence, you feel like you’re there. You’re on Maui, looking out at the sun going down and it sets the hairs on your neck up.

So this is the one we most want, but can least deliver right now. Where we’re at is just running studies, to determine what would work? How could we change the rendering engine? How could we change the optics and displays to give us this? But high dynamic range, that’s the fourth, perhaps king of them all.

The Starburst prototype, pictured below, demonstrated an implementation of extremely bright visuals in VR with high dynamic range (HDR), which Meta CEO Mark Zuckerberg described as “arguably the most important dimension of all.”

While Starburst’s brightness significantly improves the sense of presence and realism, the current prototype would be “wildly impractical” to ship as a product, as Zuckerberg put it. If you haven’t dived into it yet, we highly recommend making the time to watch Tested’s full video above as well as listening to the podcast with Lanman and Bosworth embedded below. As Meta’s CTO said, the prototypes “give you the ability to reason about the future, which is super helpful because it lets us focus.”

We also reached out over direct message to Norman Chan at Tested because his exclusive look at the hardware prototypes, and the comment he made to Zuckerberg that Starburst was “the demo I didn’t want to take off,” suggest HDR is likely to be a critical area of improvement for future HMDs. Where the gap between Quest 2’s angular resolution and the “retinal” resolution of the Butterscotch prototype is 3x, the gap between Quest 2’s brightness and Starburst’s is roughly 200x, meaning there’s a larger chasm to cross in brightness and dynamic range before being able to match “pretty much any indoor environment,” as Lanman said of Starburst.
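
For readers who want to check the brightness arithmetic, here is a minimal sketch that uses only the round figures quoted in this article: 100 nits for Quest 2, around 1,000 nits for top HDR televisions, and 20,000 nits for Starburst. Meta describes Starburst as "more than" 20,000 nits, so that value is treated as a lower bound. Expressing the gap in photographic stops (doublings of luminance) is our own framing, not something Meta used, and the function name is purely illustrative.

```python
import math

# Peak luminance figures quoted in the article, in nits.
# Starburst is described as "more than 20,000", so this is a lower bound.
QUEST_2_NITS = 100
HDR_TV_NITS = 1_000
STARBURST_NITS = 20_000


def brightness_gap(low_nits, high_nits):
    """Return (linear ratio, ratio in stops) between two peak luminances.

    One stop is one doubling of luminance, so stops = log2(ratio).
    """
    ratio = high_nits / low_nits
    return ratio, math.log2(ratio)


for name, nits in [("HDR TV", HDR_TV_NITS), ("Starburst", STARBURST_NITS)]:
    ratio, stops = brightness_gap(QUEST_2_NITS, nits)
    print(f"Quest 2 -> {name}: {ratio:.0f}x, about {stops:.1f} stops")
```

With these inputs, the script reports a 10x gap (about 3.3 stops) from Quest 2 to a 1,000-nit television and a 200x gap (about 7.6 stops) from Quest 2 to Starburst.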

“The qualitative benefits of HDR were striking in the Starburst prototype demo I tried, even though the headset’s display was far from retinal resolution,” Chan wrote to us. “Getting to something like 20,000 nits in a consumer headset is going to be a big technical challenge, but I could see incremental improvements in luminance through efficiencies in display panel transmittance. What excites me is that producing HDR imagery isn’t computationally taxing–there’s so much existing media with embedded HDR metadata that will benefit in HDR VR headsets. I can’t wait to replay some of my favorite VR games remastered for HDR!”

UploadVR News Writer Harry Baker contributed to this report.