Electronic Head Actuation will have you turning the other cheek, whether you like it or not.

A recently published paper from the University of Chicago shows how researchers can use electrical muscle stimulation (EMS) to manipulate a person’s head and assist them during virtual reality (VR) and augmented reality (AR) experiences.

The process, known as Electronic Head Actuation, works by stimulating specific neck muscles so that they involuntarily tilt your head sideways (cervical lateral flexion), turn it left and right (cervical rotation), and tip it up (cervical extension) and down (cervical flexion).

In a compilation video released by the research team, you can see a person wearing a Microsoft HoloLens mixed reality headset extinguishing an AR fire with a real-world fire extinguisher. To assist him, an observer in the real world uses a PlayStation controller to send signals to the four main muscle groups in the user’s neck, turning his head and guiding him to the fire extinguisher.

Later in the video, we see a person wearing a Meta Quest VR headset while another person pilots a drone fitted with a camera. Through the drone, the pilot can also control the VR user’s neck movements, keeping their two perspectives in sync.

In an interview with VRScout, Yudai Tanaka, a Ph.D. student in the Human Computer Integration Lab at the University of Chicago and one of the researchers on the Electronic Head Actuation team, described the project’s motivation: “We strive to solve problems in human-computer interfaces. The one really hard problem is how to miniaturize strong actuators that can move your body—like robotic exoskeletons.”

Exoskeletons, however, require a lot of battery power to operate, and as Tanaka puts it, “exoskeletons are large, heavy, and cumbersome.”

Tanaka and the team looked at how they could move the user’s body, much as an exoskeleton does by pushing against it with mechanical force, without putting the user in danger. Using EMS, the team was able to manipulate the user’s head without an exoskeleton, and Electronic Head Actuation also let them create haptic force feedback in the user’s neck for AR and VR experiences.

One idea Tanaka is especially excited about is having two different users share the same head movements simultaneously, both in the physical world and in VR/AR. With a more robust full-body EMS control system, two users could potentially be synchronized over a distance and connected through the metaverse via an AR or VR device.

One person could remotely move another person’s head, arms, and legs, creating a sort of human puppet, while an AR or VR headset lets the remote operator see what the person on-site is seeing. In one demo, the team showed how they could control someone’s hand as they played guitar.

As for what’s possible today, Tanaka returned to the fire extinguisher demo mentioned earlier: “We think there’s something very different in our way of moving someone’s attention to the fire extinguisher. Imagine trying to use a smartphone or AR glasses to locate a fire extinguisher during an emergency, likely the AR glasses would render an AR arrow pointing you to the location of the fire extinguisher. While this is also effective, meaning you will eventually find the fire extinguisher, it requires more steps.”

With an arrow, the user has to:

- Look at the arrow

- Understand the transformation it represents

- Convert it into their own head movements

“Our approach is different from others in that we don’t present any information to the user such as visuals and sounds, instead we directly make the user look at the object, which requires only one step,” said Tanaka. The goal is for the user to “learn the muscle memory needed to act in this situation, rather than resorting to extra tools that might not be available or involve too many steps.”

To explore other applications, the team made their system available to Unity developers, allowing them to import 3D objects into their own projects. Developers can assign either a static target, such as an AR house or tree, or a trajectory target, like a moving car, animal, or arrow. The system also runs over Wi-Fi, which opens up possibilities for MR, VR, AR, and mobile tracking.

Tanaka and the rest of the team are continuing their work. The team also includes Jun Nishida, a postdoc in the Human Computer Integration Lab at UChicago, and Pedro Lopes, a faculty member in the Department of Computer Science at the University of Chicago and director of the Human Computer Integration Lab.

“We hope our work will inspire researchers to further delve into this avenue of directly assisting the user’s head movement or point of view. While we see many techniques to indirectly achieve this, like showing arrows to the user, we think some applications might require more direct ways to achieve this, such as our fire training application.”

For a more thorough dive into Electronic Head Actuation, you can read the team’s full paper.

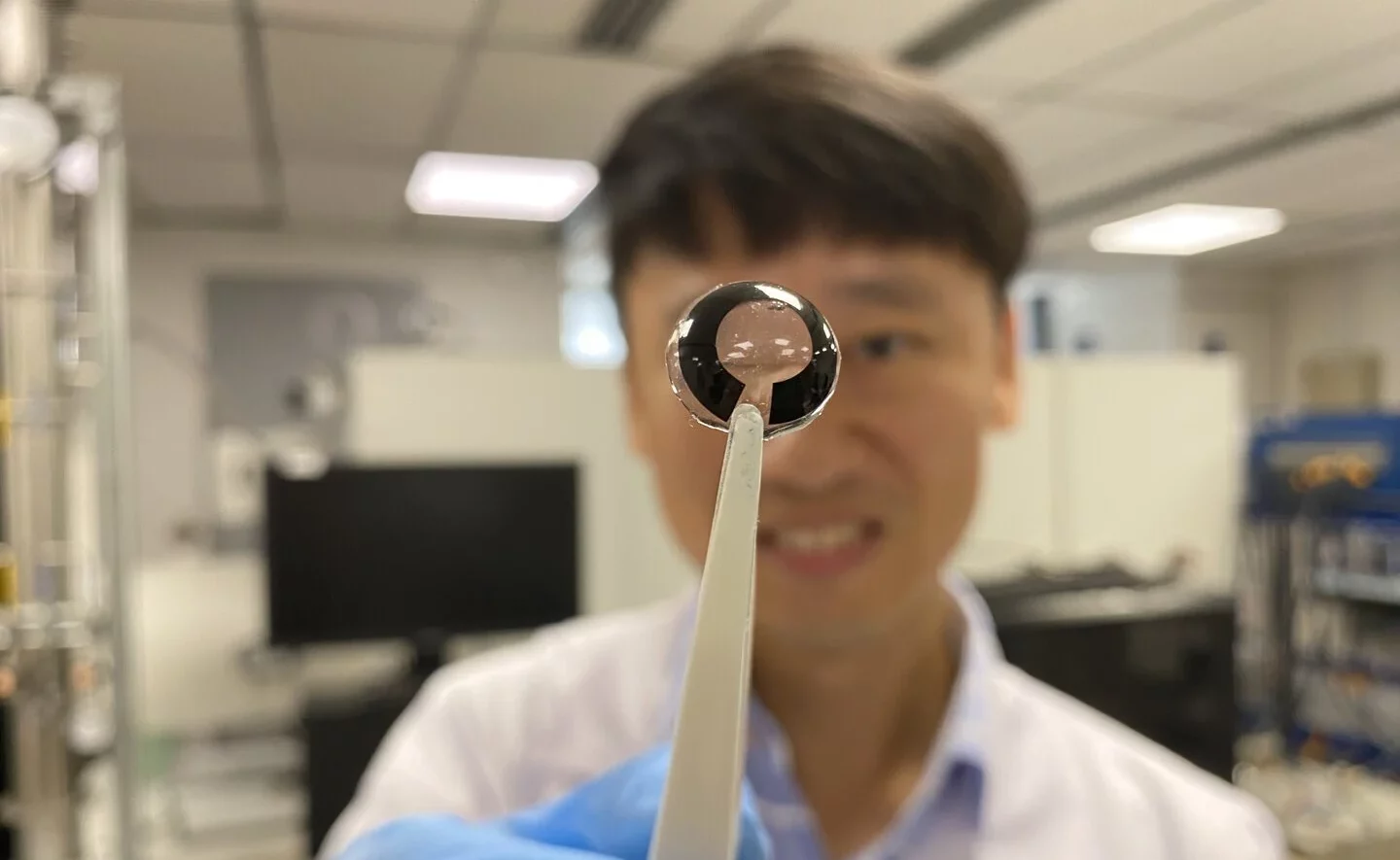

Image Credit: Yudai Tanaka, Jun Nishida, Pedro Lopes

The post Researchers Use VR/AR Tech To Control The Human Body appeared first on VRScout.