Developers can now add Meta’s speech recognition to Oculus Store & App Lab apps, and can experiment with mixed reality spatial anchors.

Voice SDK & Spatial Anchors were both announced at Connect 2021 and are now available as part of the Oculus SDK v35 release.

Voice SDK is powered by Wit.ai, the voice interface company Meta acquired in 2015. Because Wit processes speech server-side, Voice SDK won’t work offline and adds some latency.

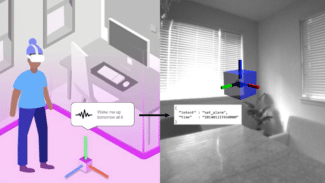

Meta says this new API enables searching for in-app content and “voice driven gameplay” such as casting magic spells by voice or holding a conversation with a virtual character. While last month’s Oculus SDK release included Voice SDK, it was marked ‘experimental’, so it couldn’t be used in Oculus Store or App Lab apps.

Voice SDK can do more than just turn speech into text. Its natural language processing (NLP) features can detect commands (e.g. cancel), parse entities (e.g. distance, time, quantity), and analyze sentiment (positive, neutral, or negative). You can read the Voice SDK documentation here.
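To make the split between commands, entities, and sentiment concrete, here is a minimal Python sketch that reads a JSON reply shaped like a response from Wit.ai’s HTTP `/message` endpoint, which groups results into `intents`, `entities`, and `traits` (sentiment arrives as the built-in `wit$sentiment` trait). The sample payload and its values are hypothetical, as are the helper names `top_intent`, `entity_values`, and `sentiment`. This is not the Unity-side Voice SDK API; in a Quest app the SDK surfaces a similar response through its own events.

```python
import json

# Hypothetical reply, shaped like a Wit.ai /message response (values are made up).
SAMPLE_RESPONSE = """
{
  "text": "cancel the countdown in five minutes",
  "intents": [
    {"id": "1", "name": "cancel", "confidence": 0.94},
    {"id": "2", "name": "start_timer", "confidence": 0.03}
  ],
  "entities": {
    "wit$duration:duration": [
      {"id": "3", "name": "wit$duration", "role": "duration",
       "body": "five minutes", "value": 300, "unit": "second", "confidence": 0.98}
    ]
  },
  "traits": {
    "wit$sentiment": [{"id": "4", "value": "neutral", "confidence": 0.71}]
  }
}
"""


def top_intent(response, min_confidence=0.5):
    """Return the most confident intent name, or None if it falls below the threshold."""
    intents = response.get("intents", [])
    if not intents:
        return None
    best = max(intents, key=lambda intent: intent["confidence"])
    return best["name"] if best["confidence"] >= min_confidence else None


def entity_values(response):
    """Map each entity key (e.g. 'wit$duration:duration') to its list of parsed values."""
    return {
        key: [match.get("value") for match in matches]
        for key, matches in response.get("entities", {}).items()
    }


def sentiment(response):
    """Return the most confident value of the built-in sentiment trait, if present."""
    values = response.get("traits", {}).get("wit$sentiment", [])
    if not values:
        return None
    return max(values, key=lambda trait: trait["confidence"])["value"]


if __name__ == "__main__":
    reply = json.loads(SAMPLE_RESPONSE)
    print(top_intent(reply))     # cancel
    print(entity_values(reply))  # {'wit$duration:duration': [300]}
    print(sentiment(reply))      # neutral
```

A game would typically branch on the intent name (e.g. trigger a spell or cancel an action) and use the entity values as parameters, ignoring low-confidence results.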

Spatial Anchors is a new experimental feature for mixed reality, meaning it can’t be used in Store or App Lab apps yet.

Developers can already use passthrough, the view from the Quest’s greyscale tracking cameras, either as a layer (e.g. the background) or projected onto a custom mesh (e.g. a desk in front of you). Spatial Anchors are world-locked reference frames that let apps place content at specific positions the user defines in their room, and the headset remembers these anchor positions between sessions. You can read the documentation for Spatial Anchors here.
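Conceptually, a world-locked anchor is a saved pose in the room, and anchored content is stored as an offset in that anchor’s frame rather than as an absolute position, so it stays in place even if the headset’s tracking origin differs between sessions. The Python sketch below illustrates that transform under simplifying assumptions (yaw-only rotation, y-up coordinates in metres). `Pose`, `place_content`, `CONTENT_OFFSETS`, and the `desk-anchor` ID are hypothetical; this is not the Oculus Spatial Anchors API.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Position in metres (y is up) plus a rotation about the vertical axis, in radians."""
    x: float
    y: float
    z: float
    yaw: float = 0.0

    def transform_point(self, local):
        """Map a point from this pose's local frame into world coordinates."""
        lx, ly, lz = local
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        # Rotate about the up (y) axis, then translate by the anchor's position.
        return (c * lx + s * lz + self.x, ly + self.y, -s * lx + c * lz + self.z)


# Hypothetical app data: each piece of content is stored as an offset in its
# anchor's frame, keyed by a made-up anchor ID, not as a world position.
CONTENT_OFFSETS = {"desk-anchor": (0.3, 0.1, 0.0)}  # 30 cm along x, 10 cm up


def place_content(anchor_poses, offsets):
    """Return world positions for content whose anchor was located this session."""
    return {
        anchor_id: anchor_poses[anchor_id].transform_point(offset)
        for anchor_id, offset in offsets.items()
        if anchor_id in anchor_poses
    }


if __name__ == "__main__":
    # The tracking origin can differ between sessions, so the anchor's world pose
    # differs too, but the content keeps the same position relative to the anchor.
    session_1 = {"desk-anchor": Pose(1.0, 0.75, 2.0)}
    session_2 = {"desk-anchor": Pose(-0.5, 0.75, 0.3, yaw=math.pi / 2)}
    print(place_content(session_1, CONTENT_OFFSETS))
    print(place_content(session_2, CONTENT_OFFSETS))
```

In this sketch, only the anchor poses have to be re-located each session; the content offsets can be saved once and reused unchanged.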

Next year, Meta plans to add Scene Understanding as an experimental feature. Users will be prompted to manually mark out their walls and furniture and to enter their ceiling height, a process called Scene Capture. This will need to be done for each room, but the headset should remember the resulting Scene Model between sessions.